“If it’s really a revolution, it doesn’t take us from point A to point B, it takes us from point A to chaos.”

– Clay Shirky, 2005

In 2019, we reel from a series of improbable outcomes. Whether it’s the 2016 US election, Brexit, the resurgence of the theory that the earth is flat, or the decline of vaccination, turns which once seemed unthinkable have arrived in force. Culture war blossoms around developments which some see as progress, and others find threatening and absurd. This coincides with the rise of centralized communication platforms that reward compulsive engagement, indiscriminately amplifying the reach of compelling messages—without regard for accuracy or impact.

Historically, composition and distribution of information took significant effort on the part of the message’s sender. Today, the cost of information transfer has collapsed. As a result, the burden of communication has shifted off the sender and onto the receiver.

Interconnectedness via communications technology is helping to change social norms before our eyes, at a frame rate we can’t adjust to. If we want to understand why we’ve gone from point A to chaos, we need to start by examining what happens when the cost of communicating with each other falls through the floor.

A brief history of one-to-one communication

The year 1787 offers us another moment in time when the communications technology of the day stood to influence the direction of history. The Constitutional Convention was underway, and the former British colonies were voting on whether to adopt the hotly contested new form of government. Keenly aware that news of how each state fell would influence the behavior of those who had yet to cast their votes, Alexander Hamilton assured James Madison, his primary collaborator at the time, that he would pay the cost of fast riders to move letters between New York and Virginia, should either one ratify the Constitution.

The constraints of communications technology back then meant it was possible for an event to occur in one location without people elsewhere hearing of it for days or weeks on end. For this single piece of information to be worth the cost of transit between Hamilton and Madison, nothing less than the future of the new republic had to be at stake.

From a logistical standpoint, moving information from one place to another required paper, ink, wax, a rider, and a horse. Latency was measured in days. Hamilton and Madison’s communications likely benefited from the postal system, which emerged in 1775 to provide convenient, affordable courier service. Latency was still measured in days, but by then it was possible to batch efforts and share labor costs with other citizens.

Around 1830, messages grew faster, if not cheaper, with the advent of the electric telegraph. The telegraph allowed transmission of information across cities and eventually continents, with latency clocking in at a rate of two minutes per character. The sender of a message was charged by the letter, and an operator was needed at each end to transcribe, transmit, receive and deliver the message.

With the telephone, in 1876, it became possible to hold an object to your ear and hear a human voice transmitted in real time. The telephone required an operator to initiate the circuit needed for each conversation, and once they did, back-and-forth could unfold without intermediaries. This dramatic acceleration from letters carried on horseback to the telephone took place in the space of 89 years. By the early 20th century, phone switching was automated, further reducing the cost of information exchange.

By the 2000s, mobile phones and the internet enabled email and texting, and instantaneous communication was within reach between anyone in the world who was lucky enough to have access to these technologies in their early days on the consumer market. For these, no additional labor is needed beyond the sender’s composition of the message and the receiver’s consideration of its contents. Automation handles encoding, transmission, relaying, delivery and storage of the whole thing. The time between a message being written and a message being received has been reduced to mere seconds.

Constraints on communication at this phase are dictated by access to technology, rather than access to labor.

A brief history of one-to-many communication

While Alexander Hamilton wrote copious personal letters, he also leveraged the mass communications medium of his day, the press, to shape political dialogue. He was responsible for 51 of the 85 essays published in the Federalist Papers. By 1787, publishing had already benefited from the invention of the printing press. The production of written word was no longer rate-limited by the capacity of scribes or clergy, or restricted by the church. Those who were educated enough to write, and connected enough to publish, could do so. Of course, only a select handful of people in the early United States met those criteria.

It’s not that fake news is a recent phenomenon, it’s that you used to need special access to distribute it. Samuel Adams was the son of a church deacon, a successful merchant, and a driving force in 1750s Boston politics. Benjamin Franklin apprenticed under his brother, a printer, and eventually went on to take up the trade, running multiple newspapers over his lifetime. In 1765, Samuel Adams falsely painted Thomas Hutchinson as a supporter of the Stamp Act in the press, leading a mob of arsonists to burn down Hutchinson’s house. Meanwhile, Franklin created a counterfeit newspaper claiming the British paid Native Americans to scalp colonists, which he then circulated in Europe to further the American cause.

Soon, other media emerged to broadcast ideas. By the 1930s, radio was a powerful conduit for culture and news, carrying both current events and unique entertainment designed for the specific constraints of an audio-only format. Radio could move over vast distances, and it did so at the speed of light. At the same time, radio required specialist engineers to operate and maintain the expensive equipment needed to transmit its payload. It required more specialists to select and play the content people wanted to hear.

Television emerged using similar technology, with additional overhead. As well as all the work needed for transmission, television required additional specialists and elaborate equipment to capture a moving image.

Radio and television both operated over electromagnetic spectrum, which was prone to interference if not carefully managed. By necessity, spectrum is regulated, which creates scarcity, making the owners of broadcast companies powerful arbiters of the collective narrative.

So between print, radio and television, a handful of corporations determined what was true, what would be shared with the masses, and who was allowed to be part of the process.

Force multipliers in communication

Eventually, innovations in the technologies above began to cannibalize and build off of one another, helping the already declining cost of information transfer fall even faster.

By the late 1800s, typewriters allowed faster composition of the written word and clearer interpretation for the recipient. By the late 1970s, the electronic word processor used integrated circuits and electromechanical components to provide a digital display, allowing on-the-fly editing of text before it was printed.

Then, the 1980s saw the rise of the personal computer, which absorbed the single-use device of the word processor, folding it in and making it just another software application. For the first time, the written word was a stream of instantly visible, digital text, making the storage and transmission of thoughts and ideas easier than ever.

Alongside the PC, the emergence of packet-switched networks opened the door to fully automated computer communications. This formed the backbone of the early internet, and of services ranging from chat to newsgroups to the web.

The arrival of the open source software revolution around the year 2000 enabled unprecedented productivity for software teams. Because the building blocks of web software applications were free and modifiable by anyone, small teams could move quickly from concept to execution without having to sink time into the basic infrastructure common to any site. For example, in 2004, Facebook was built in a dorm room using Linux as the server operating system, Apache as its web server, MySQL for its database, and PHP as its server-side programming language. Facebook helped usher in the current era of centralized, corporate-controlled, modern social software, and it was built on the back of open source.

The pattern seen in the evolution from printing press to home PC is repeated and supercharged when we encounter the smartphone. By 2010, smartphones paired the ability to record audio and video with a constant internet connection. Thanks to the combination of smartphones and social software, everyday consumers were granted the ability to capture, edit and distribute media with the same global reach as CNN circa 1990. This had meaningful impact during the protests against police violence in Ferguson, Missouri, in 2014. Local residents and citizen journalists streamed real-time, uncut video of events as they unfolded—without having to consult any television executives.

In the end, this is a story of labor savings. Today, benefits from compounding automation and non-scarce information technology resources, like open source code, have collapsed the amount of human labor needed to reach mass audiences. An individual can compose and transmit content to an audience of millions in an instant.

This leverage for communication does not have a historical precedent.

Dissolving norms

As information transfer grows rapidly cheaper, structures and dynamics which once seemed solid have become vertiginously fluid.

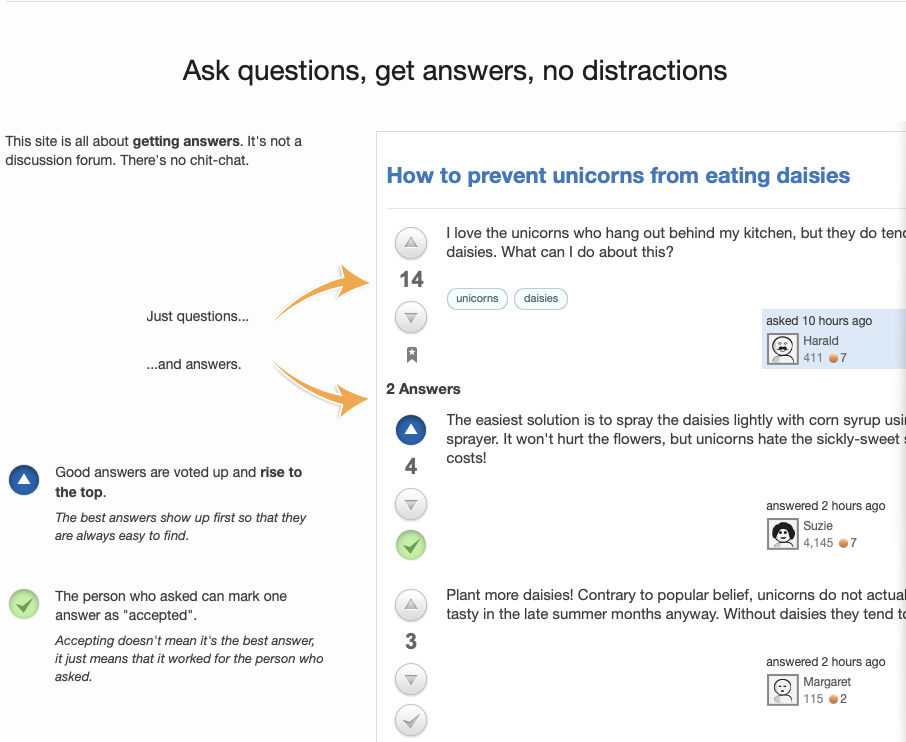

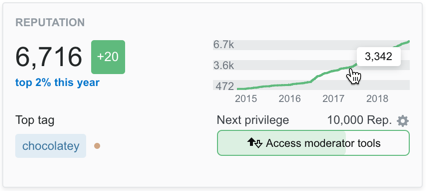

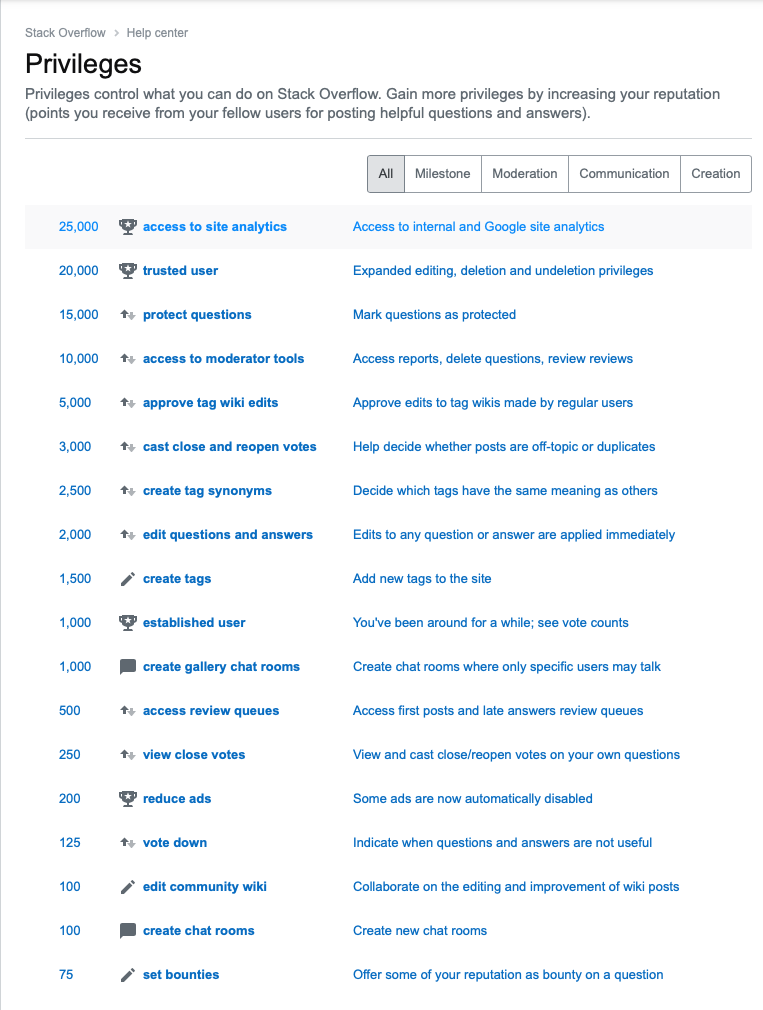

In the pre-internet age, you had producers and you had consumers. Today, large-scale social platforms are simultaneously media channel and watering hole, and power users may shift between producer and consumer in a single session. The distinction between one-to-one and one-to-many communication has also become far less clear. A broadcast-style message may result in a public response from a passerby which catalyzes an interaction between the passerby and the original poster, with lurkers silently watching the exchange unfold. Later, the conversation may be resurfaced and re-broadcast by a third party.

The intent of our communications also isn’t always fully known to us when we enact them, and the results can be disorienting. We’ve become increasingly accustomed to mumbling into a megaphone, and people may face lasting consequences for things they say online. Ease of distribution has also blurred the lines between public and private communication. In the past, even the act of writing a letter to a single individual involved significant costs and planning. Today, the effort required for writing a letter and writing an essay seen by millions is functionally identical, and basically free.

Meanwhile, professional broadcast networks are no longer the final arbiters of our collective narrative. Journalism used to be the answer to the question “How will society be informed?” In a world of television, radio and newspaper, those who controlled the exclusive organs of media decided what the audience would see, and therefore what it meant to be informed. Defining our shared narratives is now a collaborative process, and the question of what is relevant has billions of judges able to weigh in. Today we have shifted, according to An Xiao Mina, from “broadcast consensus to digital dissensus.”

Uncharted waters

In 2019, we face an inversion of the economics of information. When the ability to send a message is a scarce resource, as it was in 1787, you’re less likely to use those mechanisms to transmit trivial updates. Today, the extreme ease of information transfer invites casualness, which begets the inconsequential. Swimming in these waters is leaving us open to far more noise masquerading as signal than in eras past.

Many of us can attest that the time between considering what we want to say and getting to say it has shrunk to minutes or seconds, and the messages we send are increasingly frequent and bite-sized, thought out on the fly. When this dynamic compounds over time and spreads across human culture, with both individuals and institutions taking part, we find ourselves experiencing the cognitive equivalent of a distributed denial-of-service attack through an endless torrent of “news,” opinion, analysis and comment. Just ask the Macedonian teenagers making bank churning out fake news articles.

To make sense of this, we need new design patterns, technologies, narratives, and disciplines. The decline of broadcast consensus leaves us grappling with a painful loss of clarity, yet it simultaneously creates opportunities for voices who were missing in eras past. We’ve sailed off the side of the map, into waters not yet charted. Now, we’re called on to relearn how to navigate, even as our instruments are rendered useless. And we need all the help we can get.